Erik Brynjolfsson, a professor at the MIT Sloan School of Management, raises these questions in his latest book, “Race Against The Machine,” co-written with Andrew McAfee: Can we keep pace with the technological innovations that are creating robots? Can we accept the danger they pose in human terms as they transform the workforce and work itself? How does the digital revolution accelerate innovation, the engine of productivity, while irreversibly transforming employment and the economy?

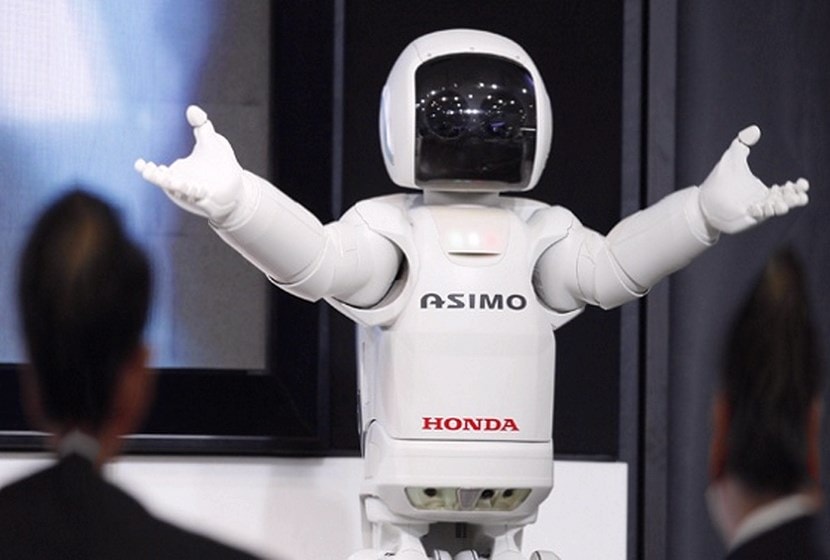

Photo: Visitors watch a Honda Asimo robot at Honda Motor Co. headquarters in Tokyo. Canon Inc. is moving toward fully automated production of digital cameras in order to cut costs. (AP)

According to Erik Brynjolfsson, computers are taking not only a large share of our time but also our jobs. Examples:

Apple supplier Foxconn recently announced restructuring plans to replace a large part of its workforce with more than a million robots within a few years.

The U.S. pharmacy chain CVS Caremark Corp, among others, has replaced its cashiers with self-service checkout machines to save on labor costs.

The sports-writing software Stats Monkey produces some articles in place of journalists.

Erik Brynjolfsson argues that these changes are driven by rapidly evolving technology: “Technology has improved exponentially, so it is normal to see such astonishing changes year after year. Even technologists are caught off guard.”

The upside, he says, is that higher productivity improves the economy. The downside is that the gains always go to a narrow slice of the population.

Brynjolfsson explains how automation is radically changing the workforce and how the digital revolution accelerates innovation, the engine of productivity, irreversibly transforming employment and the economy.

Excerpts from “Race Against The Machine,” by Erik Brynjolfsson and Andrew McAfee

“Any sufficiently advanced technology is indistinguishable from magic.” Arthur C. Clarke, 1962

We used to be pretty confident that we knew the relative strengths and weaknesses of computers vis-à-vis humans. But computers have started making inroads in some unexpected areas. This fact helps us to better understand the past few turbulent years and the true impact of digital technologies on jobs.

A good illustration of how much recent technological advances have taken us by surprise comes from comparing a carefully researched book published in 2004 with an announcement made in 2010. The book is The New Division of Labor by economists Frank Levy and Richard Murnane. As its title implies, it’s a description of the comparative capabilities of computers and human workers.

In the book’s second chapter, “Why People Still Matter,” the authors present a spectrum of information-processing tasks. At one end are straightforward applications of existing rules.

These tasks, such as performing arithmetic, can be easily automated. After all, computers are good at following rules.

At the other end of the complexity spectrum are pattern-recognition tasks where the rules can’t be inferred. The New Division of Labor gives driving in traffic as an example of this type of task, and asserts that it is not automatable:

The … truck driver is processing a constant stream of [visual, aural, and tactile] information from his environment. … To program this behavior we could begin with a video camera and other sensors to capture the sensory input. But executing a left turn against oncoming traffic involves so many factors that it is hard to imagine discovering the set of rules that can replicate a driver’s behavior. …

Articulating [human] knowledge and embedding it in software for all but highly structured situations are at present enormously difficult tasks. … Computers cannot easily substitute for humans in [jobs like truck driving].

The results of the first DARPA Grand Challenge, held in 2004, supported Levy and Murnane’s conclusion. The challenge was to build a driverless vehicle that could navigate a 150-mile route through the unpopulated Mohave Desert. The “winning” vehicle couldn’t even make it eight miles into the course and took hours to go even that far.

In Domain After Domain, Computers Race Ahead

Just six years later, however, real-world driving went from being an example of a task that couldn’t be automated to an example of one that had. In October of 2010, Google announced on its official blog that it had modified a fleet of Toyota Priuses to the point that they were fully autonomous cars, ones that had driven more than 1,000 miles on American roads without any human involvement at all, and more than 140,000 miles with only minor inputs from the person behind the wheel. (To comply with driving laws, Google felt that it had to have a person sitting behind the steering wheel at all times.)

Levy and Murnane were correct that automatic driving on populated roads is an enormously difficult task, and it’s not easy to build a computer that can substitute for human perception and pattern matching in this domain. Not easy, but not impossible either—this challenge has largely been met.

The Google technologists succeeded not by taking any shortcuts around the challenges listed by Levy and Murnane, but instead by meeting them head-on. They used the staggering amounts of data collected for Google Maps and Google Street View to provide as much information as possible about the roads their cars were traveling. Their vehicles also collected huge volumes of real-time data using video, radar, and LIDAR (light detection and ranging) gear mounted on the car; these data were fed into software that takes into account the rules of the road, the presence, trajectory, and likely identity of all objects in the vicinity, driving conditions, and so on. This software controls the car and probably provides better awareness, vigilance, and reaction times than any human driver could. The Google vehicles’ only accident came when the driverless car was rear-ended by a car driven by a human driver as it stopped at a traffic light.

None of this is easy. But in a world of plentiful accurate data, powerful sensors, and massive storage capacity and processing power, it is possible. This is the world we live in now. It’s one where computers improve so quickly that their capabilities pass from the realm of science fiction into the everyday world not over the course of a human lifetime, or even within the span of a professional’s career, but instead in just a few years.

Levy and Murnane give complex communication as another example of a human capability that’s very hard for machines to emulate. Complex communication entails conversing with a human being, especially in situations that are complicated, emotional, or ambiguous. Evolution has “programmed” people to do this effortlessly, but it’s been very hard to program computers to do the same. Translating from one human language to another, for example, has long been a goal of computer science researchers, but progress has been slow because grammar and vocabulary are so complicated and ambiguous.

In January of 2011, however, the translation services company Lionbridge announced pilot corporate customers for GeoFluent, a technology developed in partnership with IBM. GeoFluent takes words written in one language, such as an online chat message from a customer seeking help with a problem, and translates them accurately and immediately into another language, such as the one spoken by a customer service representative in a different country.

GeoFluent is based on statistical machine translation software developed at IBM’s Thomas J. Watson Research Center. This software is improved by Lionbridge’s digital libraries of previous translations. This “translation memory” makes GeoFluent more accurate, particularly for the kinds of conversations large high-tech companies are likely to have with customers and other parties. One such company tested the quality of GeoFluent’s automatic translations of online chat messages. These messages, which concerned the company’s products and services, were sent by Chinese and Spanish customers to English-speaking employees. GeoFluent instantly translated them, presenting them in the native language of the receiver. After the chat session ended, both customers and employees were asked whether the automatically translated messages were useful, that is, whether they were clear enough to allow the people to take meaningful action. Approximately 90% reported that they were. In this case, automatic translation was good enough for business purposes.
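The combination described above, a statistical engine backed by a library of earlier translations, can be pictured with a minimal Python sketch. Everything here is an assumption for illustration. The `TranslationMemory` class, its exact-match lookup, and the `placeholder_engine` stand-in are hypothetical. They are not Lionbridge’s or IBM’s actual API or method. The sketch only shows the general idea of reusing a stored translation when one exists and falling back to machine translation otherwise.

```python
from typing import Callable, Dict, Optional, Tuple


class TranslationMemory:
    """Hypothetical store of previously approved translations."""

    def __init__(self) -> None:
        self._entries: Dict[str, str] = {}

    @staticmethod
    def _key(text: str) -> str:
        # Normalize case and whitespace so trivially different inputs match.
        return " ".join(text.lower().split())

    def add(self, source: str, target: str) -> None:
        self._entries[self._key(source)] = target

    def lookup(self, source: str) -> Optional[str]:
        return self._entries.get(self._key(source))


def translate(segment: str, memory: TranslationMemory,
              machine_translate: Callable[[str], str]) -> Tuple[str, str]:
    """Return (translation, origin): reuse a stored translation when one
    exists, otherwise fall back to the machine-translation engine."""
    stored = memory.lookup(segment)
    if stored is not None:
        return stored, "memory"
    return machine_translate(segment), "machine"


def placeholder_engine(text: str) -> str:
    # Stand-in for a statistical MT system; it only tags its input.
    return f"[MT] {text}"


tm = TranslationMemory()
tm.add("Mi impresora no funciona", "My printer does not work")
print(translate("mi  impresora no funciona", tm, placeholder_engine))
print(translate("Necesito ayuda", tm, placeholder_engine))
```

Production translation-memory tools typically also retrieve "fuzzy" near-matches, not just exact ones. This sketch keeps to exact matching after normalization for brevity.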

The Google driverless car shows how far and how fast digital pattern recognition abilities have advanced recently. Lionbridge’s GeoFluent shows how much progress has been made in computers’ ability to engage in complex communication. Another technology developed at IBM’s Watson labs, this one actually named Watson, shows how powerful it can be to combine these two abilities and how far the computers have advanced recently into territory thought to be uniquely human.

Watson is a supercomputer designed to play the popular game show Jeopardy! in which contestants are asked questions on a wide variety of topics that are not known in advance. In many cases, these questions involve puns and other types of wordplay. It can be difficult to figure out precisely what is being asked, or how an answer should be constructed. Playing Jeopardy! well, in short, requires the ability to engage in complex communication.

The way Watson plays the game also requires massive amounts of pattern matching. The supercomputer has been loaded with hundreds of millions of unconnected digital documents, including encyclopedias and other reference works, newspaper stories, and the Bible. When it receives a question, it immediately goes to work to figure out what is being asked (using algorithms that specialize in complex communication), then starts querying all these documents to find and match patterns in search of the answer. Watson works with astonishing thoroughness and speed, as IBM research manager Eric Brown explained in an interview:

We start with a single clue, we analyze the clue, and then we go through a candidate generation phase, which actually runs several different primary searches, which each produce on the order of 50 search results. Then, each search result can produce several candidate answers, and so by the time we’ve generated all of our candidate answers, we might have three to five hundred candidate answers for the clue.

Now, all of these candidate answers can be processed independently and in parallel, so now they fan out to answer-scoring analytics [that] score the answers. Then, we run additional searches for the answers to gather more evidence, and then run deep analytics on each piece of evidence, so each candidate answer might go and generate 20 pieces of evidence to support that answer.

Now, all of this evidence can be analyzed independently and in parallel, so that fans out again. Now you have evidence being deeply analyzed … and then all of these analytics produce scores that ultimately get merged together, using a machine-learning framework to weight the scores and produce a final ranked order for the candidate answers, as well as a final confidence in them. Then, that’s what comes out in the end.
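The stages Brown describes run in sequence: candidate generation from several searches, parallel evidence scoring, and a merge that weights the scores into a ranked list with confidences. A heavily simplified Python sketch of that flow follows. It is an illustration under stated assumptions, not IBM’s implementation. The function names, the toy scorers, and the fixed weights are all hypothetical. A fixed weighted sum passed through a logistic function stands in for the machine-learned merging model.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical signatures: a search returns candidate answers for a clue;
# a scorer rates how well one evidence passage supports one candidate.
Search = Callable[[str], List[str]]
Scorer = Callable[[str, str], float]


def generate_candidates(clue: str, searches: Sequence[Search]) -> List[str]:
    """Candidate generation: run several primary searches and pool the answers."""
    pooled: Dict[str, None] = {}
    for search in searches:
        for answer in search(clue):
            pooled.setdefault(answer, None)  # dedupe, keep first-seen order
    return list(pooled)


def evidence_features(candidate: str, evidence: List[str],
                      scorers: Sequence[Scorer]) -> List[float]:
    """Answer scoring: one feature per scorer, averaged over all evidence."""
    n = max(len(evidence), 1)
    return [sum(score(candidate, e) for e in evidence) / n for score in scorers]


def rank_answers(clue: str, searches: Sequence[Search],
                 gather_evidence: Callable[[str], List[str]],
                 scorers: Sequence[Scorer], weights: Sequence[float],
                 bias: float = 0.0) -> List[Tuple[str, float]]:
    candidates = generate_candidates(clue, searches)

    def features(candidate: str) -> List[float]:
        return evidence_features(candidate, gather_evidence(candidate), scorers)

    # Candidates are independent, so they can be scored in parallel ("fan out").
    with ThreadPoolExecutor() as pool:
        feature_rows = list(pool.map(features, candidates))

    # Merge: a fixed weighted sum squashed to (0, 1) stands in for the
    # machine-learned model that weights the scores in the real system.
    ranked = []
    for candidate, row in zip(candidates, feature_rows):
        z = bias + sum(w * x for w, x in zip(weights, row))
        ranked.append((candidate, 1.0 / (1.0 + math.exp(-z))))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Toy data, made up purely for illustration.
corpus = {
    "Chicago": ["O'Hare airport is in Chicago", "Midway airport is in Chicago"],
    "Toronto": ["Toronto sits on Lake Ontario"],
}
result = rank_answers(
    "a city with two big airports",
    searches=[lambda clue: ["Toronto", "Chicago"],
              lambda clue: ["Chicago", "Boston"]],
    gather_evidence=lambda c: corpus.get(c, []),
    scorers=[lambda c, e: float(c in e),
             lambda c, e: float("airport" in e.lower())],
    weights=[2.0, 1.0],
    bias=-1.5,
)
print(result)
```

In this toy run, the candidate with the most supporting evidence ("Chicago") comes out on top. A candidate with no evidence at all ("Boston") receives a low confidence instead of being silently dropped.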

What comes out in the end is so fast and accurate that even the best human Jeopardy! players simply can’t keep up. In February of 2011, Watson played in a televised tournament against the two most accomplished human contestants in the show’s history. After two rounds of the game shown over three days, the computer finished with more than three times as much money as its closest flesh-and-blood competitor. One of these competitors, Ken Jennings, acknowledged that digital technologies had taken over the game of Jeopardy! Underneath his written response to the tournament’s last question, he added, “I for one welcome our new computer overlords.”

Moore’s Law and the Second Half of the Chessboard

Where did these overlords come from? How has science fiction become business reality so quickly? Two concepts are essential for understanding this remarkable progress. The first, and better known, is Moore’s Law, which is an expansion of an observation made by Gordon Moore, co-founder of microprocessor maker Intel. In a 1965 article in Electronics Magazine, Moore noted that the number of transistors in a minimum-cost integrated circuit had been doubling every 12 months, and predicted that this same rate of improvement would continue into the future. When this proved to be the case, Moore’s Law was born.

Later modifications changed the time required for the doubling to occur; the most widely accepted period at present is 18 months. Variations of Moore’s Law have been applied to improvement over time in disk drive capacity, display resolution, and network bandwidth. In these and many other cases of digital improvement, doubling happens both quickly and reliably.
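The length of the doubling period matters a great deal over time. The short Python sketch below compares how much capacity multiplies over a decade under 12-, 18-, and 24-month doubling. The ten-year horizon and the 24-month case are illustrative choices, not figures from the excerpt.

```python
def growth_factor(years: float, months_per_doubling: float) -> float:
    """Multiplier after `years` if capacity doubles every `months_per_doubling`."""
    return 2 ** (years * 12 / months_per_doubling)


for months in (12, 18, 24):
    print(f"doubling every {months} months: x{growth_factor(10, months):,.0f} in 10 years")
```

Over ten years, moving from 18-month to 12-month doubling makes the gain roughly ten times larger. That gap is why the "later modifications" to Moore's original rate are significant.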

It also seems that software progresses at least as fast as hardware does, at least in some domains. Computer scientist Martin Grötschel analyzed the speed with which a standard optimization problem could be solved by computers over the period 1988-2003. He documented a 43 millionfold improvement, which he broke down into two factors: faster processors and better algorithms embedded in software. Processor speeds improved by a factor of 1,000, but these gains were dwarfed by the algorithms, which got 43,000 times better over the same period.
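The two factors combine by multiplication, not addition. This is how a 1,000-fold hardware gain and a 43,000-fold algorithmic gain produce a 43-millionfold total. The tiny Python check below uses only the excerpt's figures. On a logarithmic scale, the two contributions add up instead of multiplying.

```python
import math

hardware_speedup = 1_000     # faster processors (figure from the excerpt)
algorithm_speedup = 43_000   # better algorithms (figure from the excerpt)

# Independent speedups compound by multiplication, not addition.
total_speedup = hardware_speedup * algorithm_speedup
print(f"total: {total_speedup:,}x")  # total: 43,000,000x

# In orders of magnitude (log10), the contributions add up instead.
for name, factor in (("hardware", hardware_speedup), ("algorithms", algorithm_speedup)):
    print(f"{name}: {math.log10(factor):.1f} of "
          f"{math.log10(total_speedup):.1f} orders of magnitude")
```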

The second concept relevant for understanding recent computing advances is closely related to Moore’s Law. It comes from an ancient story about math made relevant to the present age by the innovator and futurist Ray Kurzweil. In one version of the story, the inventor of the game of chess shows his creation to his country’s ruler. The emperor is so delighted by the game that he allows the inventor to name his own reward. The clever man asks for a quantity of rice to be determined as follows: one grain of rice is placed on the first square of the chessboard, two grains on the second, four on the third, and so on, with each square receiving twice as many grains as the previous.

The emperor agrees, thinking that this reward is too small. He eventually sees, however, that the constant doubling results in tremendously large numbers. The inventor winds up with $2^{64} - 1$ grains of rice, or a pile bigger than Mount Everest. In some versions of the story the emperor is so displeased at being outsmarted that he beheads the inventor.
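The total follows from a geometric series. Square $n$ holds $2^{n-1}$ grains, so the first $n$ squares together hold $2^n - 1$. The short Python check below uses exact integers and compares the first half of the board with the whole board.

```python
def grains_on_square(n: int) -> int:
    """Grains on square n (1-indexed): 1, 2, 4, ... i.e. 2**(n - 1)."""
    return 2 ** (n - 1)


def grains_through(n: int) -> int:
    """Total grains on squares 1..n; the geometric series sums to 2**n - 1."""
    return sum(grains_on_square(k) for k in range(1, n + 1))


assert grains_through(64) == 2 ** 64 - 1
print(f"{grains_through(32):,}")  # 4,294,967,295
print(f"{grains_through(64):,}")  # 18,446,744,073,709,551,615
```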

In his 2000 book The Age of Spiritual Machines: When Computers Exceed Human Intelligence, Kurzweil notes that the pile of rice is not that exceptional on the first half of the chessboard:

After thirty-two squares, the emperor had given the inventor about 4 billion grains of rice. That’s a reasonable quantity—about one large field’s worth—and the emperor did start to take notice.

But the emperor could still remain an emperor. And the inventor could still retain his head. It was as they headed into the second half of the chessboard that at least one of them got into trouble.

Kurzweil’s point is that constant doubling, reflecting exponential growth, is deceptive because it is initially unremarkable. Exponential increases initially look a lot like standard linear ones, but they’re not. As time goes by—as we move into the second half of the chessboard—exponential growth confounds our intuition and expectations. It accelerates far past linear growth, yielding Everest-sized piles of rice and computers that can accomplish previously impossible tasks.
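Kurzweil's point can be checked numerically. Pit a quantity that doubles each step against one that grows by a large fixed amount each step, and find where doubling pulls ahead. The per-step amounts below are arbitrary illustrative choices, not figures from the excerpt.

```python
def first_step_doubling_wins(linear_step: int) -> int:
    """First step n at which a quantity that doubles each step (2**n, starting
    from 1) exceeds one that grows by `linear_step` each step (linear_step * n)."""
    n = 1
    while 2 ** n <= linear_step * n:
        n += 1
    return n


for step in (1_000, 1_000_000):
    print(f"+{step:,} per step is overtaken at step {first_step_doubling_wins(step)}")

for n in (8, 16, 32, 64):
    print(f"step {n:>2}: linear {1_000_000 * n:>14,}  doubling {2 ** n:>26,}")
```

Even a process that adds a million units per step falls behind by step 25, well inside the first half of the chessboard. By step 64, the gap is more than eleven orders of magnitude.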

So where are we in the history of business use of computers? Are we in the second half of the chessboard yet? This is an impossible question to answer precisely, of course, but a reasonable estimate yields an intriguing conclusion. The U.S. Bureau of Economic Analysis added “Information Technology” as a category of business investment in 1958, so let’s use that as our starting year. And let’s take the standard 18 months as the Moore’s Law doubling period. Thirty-two doublings then take us to 2006 (32 × 18 months is 48 years) and to the second half of the chessboard. Advances like the Google autonomous car, Watson the Jeopardy! champion supercomputer, and high-quality instantaneous machine translation, then, can be seen as the first examples of the kinds of digital innovations we’ll see as we move further into the second half, into the phase where exponential growth yields jaw-dropping results.
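The estimate is simple arithmetic. Start in 1958 and add 32 doubling periods. The Python sketch below reproduces the 18-month figure. The 12- and 24-month rows are added only to show how sensitive the result is to the assumed doubling period.

```python
def year_after_doublings(start_year: int, doublings: int,
                         months_per_doubling: float) -> float:
    """Calendar year reached after a number of Moore's Law doublings."""
    return start_year + doublings * months_per_doubling / 12


START_YEAR = 1958   # BEA adds "Information Technology" as an investment category
HALF_BOARD = 32     # doublings that fill the first half of the chessboard

for months in (12, 18, 24):
    year = year_after_doublings(START_YEAR, HALF_BOARD, months)
    print(f"{months}-month doubling: second half begins in {year:.0f}")
```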

Computing the Economy: The Economic Power of General Purpose Technologies

These results will be felt across virtually every task, job, and industry. Such versatility is a key feature of general purpose technologies (GPTs), a term economists assign to a small group of technological innovations so powerful that they interrupt and accelerate the normal march of economic progress. Steam power, electricity, and the internal combustion engine are examples of previous GPTs.

It is difficult to overstate their importance. As the economists Timothy Bresnahan and Manuel Trajtenberg note:

Whole eras of technical progress and economic growth appear to be driven by … GPTs, [which are] characterized by pervasiveness (they are used as inputs by many downstream sectors), inherent potential for technical improvements, and “innovational complementarities,” meaning that the productivity of R&D in downstream sectors increases as a consequence of innovation in the GPT. Thus, as GPTs improve they spread throughout the economy, bringing about generalized productivity gains.

GPTs, then, not only get better themselves over time (and as Moore’s Law shows, this is certainly true of computers), they also lead to complementary innovations in the processes, companies, and industries that make use of them. They lead, in short, to a cascade of benefits that is both broad and deep.

Computers are the GPT of our era, especially when combined with networks and labeled « information and communications technology » (ICT). Economists Susanto Basu and John Fernald highlight how this GPT allows departures from business as usual.

The availability of cheap ICT capital allows firms to deploy their other inputs in radically different and productivity-enhancing ways. In so doing, cheap computers and telecommunications equipment can foster an ever-expanding sequence of complementary inventions in industries using ICT.

Note that GPTs don’t just benefit their “home” industries. Computers, for example, increase productivity not only in the high-tech sector but also in all industries that purchase and use digital gear. And these days, that means essentially all industries; even the least IT-intensive American sectors like agriculture and mining are now spending billions of dollars each year to digitize themselves.

Note also the choice of words by Basu and Fernald: computers and networks bring an ever-expanding set of opportunities to companies. Digitization, in other words, is not a single project providing one-time benefits. Instead, it’s an ongoing process of creative destruction; innovators use both new and established technologies to make deep changes at the level of the task, the job, the process, even the organization itself. And these changes build and feed on each other so that the possibilities offered really are constantly expanding.

This has been the case for as long as businesses have been using computers, even when we were still in the front half of the chessboard. The personal computer, for example, democratized computing in the early 1980s, putting processing power in the hands of more and more knowledge workers. In the mid-1990s two major innovations appeared: the World Wide Web and large-scale commercial business software like enterprise resource planning (ERP) and customer relationship management (CRM) systems. The former gave companies the ability to tap new markets and sales channels, and also made available more of the world’s knowledge than had ever before been possible; the latter let firms redesign their processes, monitor and control far-flung operations, and gather and analyze vast amounts of data.

These advances don’t expire or fade away over time. Instead, they get combined with and incorporated into both earlier and later ones, and benefits keep mounting. The World Wide Web, for example, became much more useful to people once Google made it easier to search, while a new wave of social, local, and mobile applications is just emerging. CRM systems have been extended to smart phones so that salespeople can stay connected from the road, and tablet computers now provide much of the functionality of PCs.

The innovations we’re starting to see in the second half of the chessboard will also be folded into this ongoing work of business invention. In fact, they already are. The GeoFluent offering from Lionbridge has brought instantaneous machine translation to customer service interactions. IBM is working with Columbia University Medical Center and the University of Maryland School of Medicine to adapt Watson to the work of medical diagnosis, announcing a partnership in that area with voice recognition software maker Nuance. And the Nevada state legislature directed its Department of Motor Vehicles to come up with regulations covering autonomous vehicles on the state’s roads. Of course, these are only a small sample of the myriad IT-enabled innovations that are transforming manufacturing, distribution, retailing, media, finance, law, medicine, research, management, marketing, and almost every other economic sector and business function.

Where People Still Win (at Least for Now)

Although computers are encroaching into territory that used to be occupied by people alone, like advanced pattern recognition and complex communication, for now humans still hold the high ground in each of these areas. Experienced doctors, for example, make diagnoses by comparing the body of medical knowledge they’ve accumulated against patients’ lab results and descriptions of symptoms, and also by employing the advanced subconscious pattern recognition abilities we label “intuition.” (Does this patient seem like they’re holding something back? Do they look healthy, or is something off about their skin tone or energy level?) Similarly, the best therapists, managers, and salespeople excel at interacting and communicating with others, and their strategies for gathering information and influencing behavior can be amazingly complex.

But it’s also true, as the examples in this chapter show, that as we move deeper into the second half of the chessboard, computers are rapidly getting better at both of these skills. We’re starting to see evidence that this digital progress is affecting the business world. A March 2011 story by John Markoff in the New York Times highlighted how heavily computers’ pattern recognition abilities are already being exploited by the legal industry where, according to one estimate, moving from human to digital labor during the discovery process could let one lawyer do the work of 500.

In January, for example, Blackstone Discovery of Palo Alto, Calif., helped analyze 1.5 million documents for less than $100,000. … “From a legal staffing viewpoint, it means that a lot of people who used to be allocated to conduct document review are no longer able to be billed out,” said Bill Herr, who as a lawyer at a major chemical company used to muster auditoriums of lawyers to read documents for weeks on end. “People get bored, people get headaches. Computers don’t.”

The computers seem to be good at their new jobs. … Herr … used e-discovery software to reanalyze work his company’s lawyers did in the 1980s and ’90s. His human colleagues had been only 60 percent accurate, he found.

“Think about how much money had been spent to be slightly better than a coin toss,” he said.
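The figures in the quote support a quick back-of-the-envelope check. The Python snippet below uses only the quoted numbers. It assumes each review decision is a binary relevant/not-relevant call, so that a coin toss would be right 50% of the time. The quote does not spell out that assumption.

```python
documents = 1_500_000
cost_ceiling = 100_000   # "less than $100,000" per the quote

# Upper bound on the per-document cost of the automated review.
print(f"under ${cost_ceiling / documents:.3f} per document")  # under $0.067 per document

human_accuracy = 0.60    # Herr's finding for his colleagues' past reviews
coin_toss = 0.50         # chance level, assuming a binary relevant/not-relevant call
print(f"{(human_accuracy - coin_toss) * 100:.0f} points above chance")
```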

And an article the same month in the Los Angeles Times by Alana Semuels highlighted that despite the fact that closing a sale often requires complex communication, the retail industry has been automating rapidly.

In an industry that employs nearly 1 in 10 Americans and has long been a reliable job generator, companies increasingly are looking to peddle more products with fewer employees. … Virtual assistants are taking the place of customer service representatives. Kiosks and self-service machines are reducing the need for checkout clerks.

Vending machines now sell iPods, bathing suits, gold coins, sunglasses and razors; some will even dispense prescription drugs and medical marijuana to consumers willing to submit to a fingerprint scan. And shoppers are finding information on touch screen kiosks, rather than talking to attendants. …

The [machines] cost a fraction of brick-and-mortar stores. They also reflect changing consumer buying habits. Online shopping has made Americans comfortable with the idea of buying all manner of products without the help of a salesman or clerk.

During the Great Recession, nearly 1 in 12 people working in sales in America lost their job, accelerating a trend that had begun long before. In 1995, for example, 2.08 people were employed in « sales and related » occupations for every $1 million of real GDP generated that year. By 2002 (the last year for which consistent data are available), that number had fallen to 1.79, a decline of nearly 14 percent.
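The "nearly 14 percent" figure is a relative change between the two ratios. The short Python check below uses the numbers given in the text.

```python
def percent_change(old: float, new: float) -> float:
    """Relative change from `old` to `new`, in percent."""
    return (new - old) / old * 100


# "Sales and related" workers per $1 million of real GDP (figures from the text).
workers_per_million_1995 = 2.08
workers_per_million_2002 = 1.79
print(f"{percent_change(workers_per_million_1995, workers_per_million_2002):.1f}%")  # -13.9%
```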

If, as these examples indicate, both pattern recognition and complex communication are now so amenable to automation, are any human skills immune? Do people have any sustainable comparative advantage as we head ever deeper into the second half of the chessboard? In the physical domain, it seems that we do for the time being. Humanoid robots are still quite primitive, with poor fine motor skills and a habit of falling down stairs. So it doesn’t appear that gardeners and restaurant busboys are in danger of being replaced by machines any time soon.

And many physical jobs also require advanced mental abilities; plumbers and nurses engage in a great deal of pattern recognition and problem solving throughout the day, and nurses also do a lot of complex communication with colleagues and patients. The difficulty of automating their work reminds us of a quote attributed to a 1965 NASA report advocating manned space flight: “Man is the lowest-cost, 150-pound, nonlinear, all-purpose computer system which can be mass-produced by unskilled labor.”

Even in the domain of pure knowledge work (jobs that don’t have a physical component), there’s a lot of important territory that computers haven’t yet started to cover. In his 2005 book The Singularity Is Near: When Humans Transcend Biology, Ray Kurzweil predicts that future computers will “encompass … the pattern-recognition powers, problem-solving skills, and emotional and moral intelligence of the human brain itself,” but so far only the first of these abilities has been demonstrated. Computers so far have proved to be great pattern recognizers but lousy general problem solvers; IBM’s supercomputers, for example, couldn’t take what they’d learned about chess and apply it to Jeopardy! or any other challenge until they were redesigned, reprogrammed, and fed different data by their human creators.

And for all their power and speed, today’s digital machines have shown little creative ability.

They can’t compose very good songs, write great novels, or generate good ideas for new businesses. Apparent exceptions here only prove the rule. A prankster used an online generator of abstracts for computer science papers to create a submission that was accepted for a technical conference (in fact, the organizers invited the “author” to chair a panel), but the abstract was simply a series of somewhat-related technical terms strung together with a few standard verbal connectors.

Similarly, software that automatically generates summaries of baseball games works well, but this is because much sports writing is highly formulaic and thus amenable to pattern matching and simpler communication. Here’s a sample from a program called StatsMonkey:

UNIVERSITY PARK — An outstanding effort by Willie Argo carried the Illini to an 11-5 victory over the Nittany Lions on Saturday at Medlar Field. Argo blasted two home runs for Illinois. He went 3-4 in the game with five RBIs and two runs scored.

Illini starter Will Strack struggled, allowing five runs in six innings, but the bullpen allowed only no runs and the offense banged out 17 hits to pick up the slack and secure the victory for the Illini.
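Formulaic prose of this kind can be produced by filling templates from structured game data and choosing phrases with simple rules. The Python sketch below shows that idea. It is hypothetical throughout and is not StatsMonkey's actual implementation. The `BoxScore` fields, the phrase rules, and the margin threshold are assumptions. Only the game data comes from the sample above.

```python
from dataclasses import dataclass


# Hypothetical box-score fields; a real system would read far richer data.
@dataclass
class BoxScore:
    winner: str
    loser: str
    winner_runs: int
    loser_runs: int
    venue: str
    day: str
    star: str
    star_hits: int
    star_at_bats: int
    star_home_runs: int
    star_rbis: int


NUMBER_WORDS = {1: "one", 2: "two", 3: "three", 4: "four", 5: "five",
                6: "six", 7: "seven", 8: "eight", 9: "nine", 10: "ten"}


def spell(n: int) -> str:
    return NUMBER_WORDS.get(n, str(n))


def article_for(n: int) -> str:
    # "an 11-5 victory", "an 8-2 victory"; good enough for scores below 100.
    return "an" if str(n).startswith("8") or n in (11, 18) else "a"


def recap(g: BoxScore) -> str:
    margin = g.winner_runs - g.loser_runs
    # Hypothetical rule: a lopsided margin earns a stronger opening phrase.
    effort = "An outstanding effort" if margin >= 5 else "A solid effort"
    last_name = g.star.split()[-1]
    sentences = [
        f"{effort} by {g.star} carried the {g.winner} to "
        f"{article_for(g.winner_runs)} {g.winner_runs}-{g.loser_runs} victory "
        f"over the {g.loser} on {g.day} at {g.venue}."
    ]
    if g.star_home_runs:
        noun = "home run" if g.star_home_runs == 1 else "home runs"
        sentences.append(f"{last_name} blasted {spell(g.star_home_runs)} {noun}.")
    rbi_noun = "RBI" if g.star_rbis == 1 else "RBIs"
    sentences.append(
        f"He went {g.star_hits}-{g.star_at_bats} in the game with "
        f"{spell(g.star_rbis)} {rbi_noun}."
    )
    return " ".join(sentences)


game = BoxScore("Illini", "Nittany Lions", 11, 5, "Medlar Field", "Saturday",
                "Willie Argo", 3, 4, 2, 5)
print(recap(game))
```

Small rules such as choosing "an" before "11" are what keep template output from reading as mechanical. Gaps in such rules produce odd phrasing like "allowed only no runs" in the sample.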

The difference between the automatic generation of formulaic prose and genuine insight is still significant, as the history of a 60-year-old test makes clear. The mathematician and computer science pioneer Alan Turing considered the question of whether machines could think “too meaningless to deserve discussion,” but in 1950 he proposed a test to determine how humanlike a machine could become. The “Turing test” involves a test group of people having online chats with two entities, a human and a computer. If the members of the test group can’t in general tell which entity is the machine, then the machine passes the test.

Turing himself predicted that by 2000 computers would play his “imitation game” so well that an average interrogator would have no more than a 70% chance of identifying the machine correctly after five minutes of questioning. However, at the Loebner Prize, an annual Turing test competition established in 1990, the $25,000 prize for a chat program that can persuade half the judges of its humanity has yet to be awarded. Whatever else computers may be at present, they are not yet convincingly human.
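The two benchmarks set different bars. Turing's 1950 paper phrased his prediction as an average interrogator being right no more than 70% of the time, which means the machine is taken for the human at least 30% of the time. The Loebner threshold described above requires persuading half the judges. The Python sketch below scores a set of judge verdicts against both. It simplifies real competitions, which pair each judge with several entities and rounds. The verdicts are invented, and the function names are hypothetical.

```python
from typing import Sequence


def fooled_rate(judged_human: Sequence[bool]) -> float:
    """Fraction of judges who labeled the machine as the human."""
    if not judged_human:
        raise ValueError("need at least one verdict")
    return sum(judged_human) / len(judged_human)


def meets_turing_prediction(judged_human: Sequence[bool]) -> bool:
    # Turing (1950): an average interrogator right no more than 70% of the
    # time, i.e. the machine is mistaken for the human at least 30% of the time.
    return fooled_rate(judged_human) >= 0.30


def persuades_half_the_judges(judged_human: Sequence[bool]) -> bool:
    # The Loebner threshold as described in the excerpt.
    return fooled_rate(judged_human) >= 0.5


# Hypothetical verdicts from ten judges: True = "this one is the human".
verdicts = [True, False, False, True, False, True, False, False, True, False]
print(fooled_rate(verdicts), meets_turing_prediction(verdicts),
      persuades_half_the_judges(verdicts))
```

In this made-up example, a program that fools four judges out of ten would satisfy Turing's stated prediction but still fall short of the half-the-judges bar.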

But as the examples in this chapter make clear, computers are now demonstrating skills and abilities that used to belong exclusively to human workers. This trend will only accelerate as we move deeper into the second half of the chessboard. What are the economic implications of this phenomenon? We’ll turn our attention to this topic in the next chapter.

Excerpted from “Race Against The Machine” by Erik Brynjolfsson and Andrew McAfee.